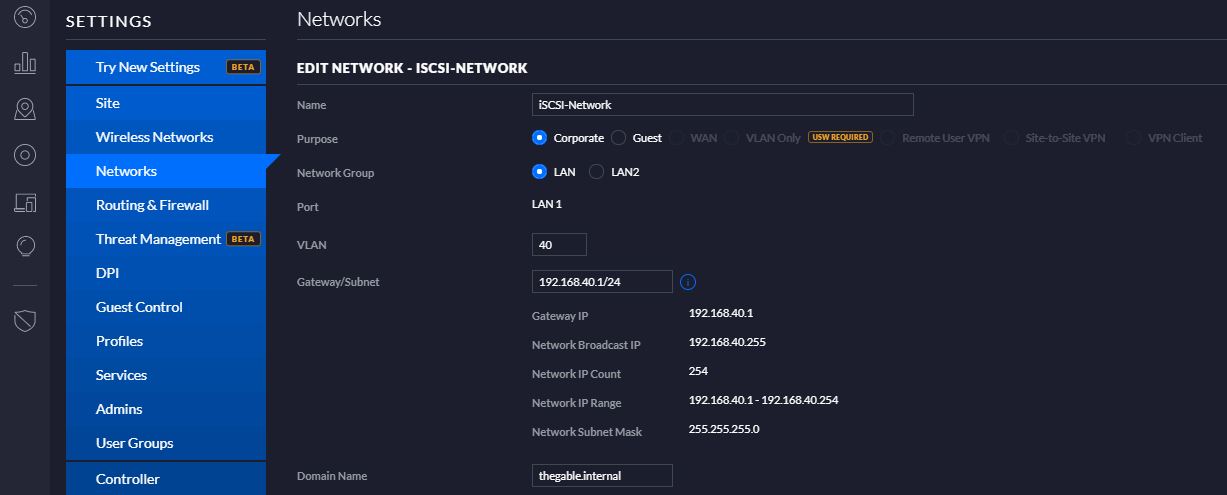

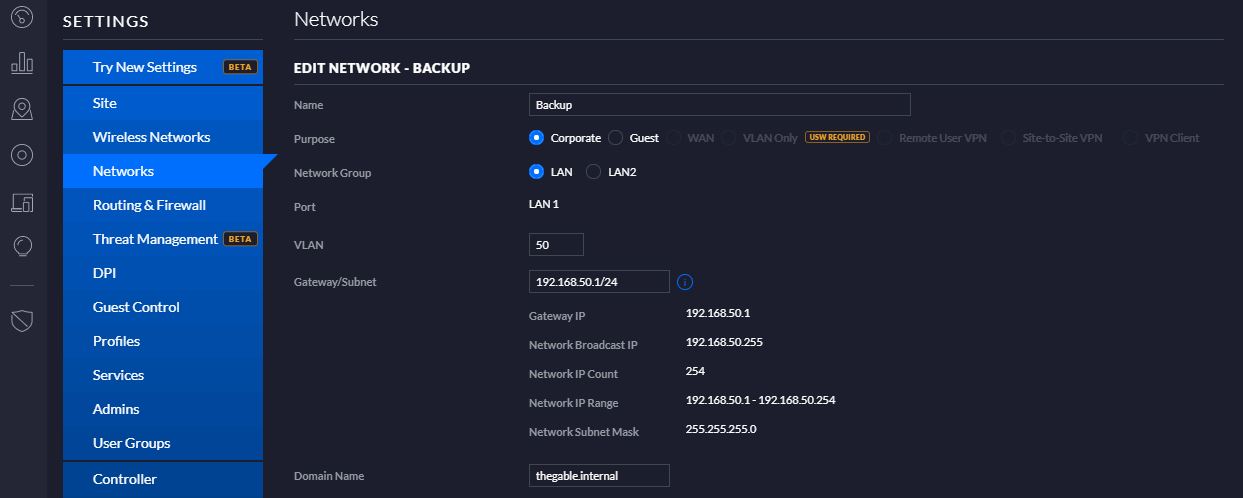

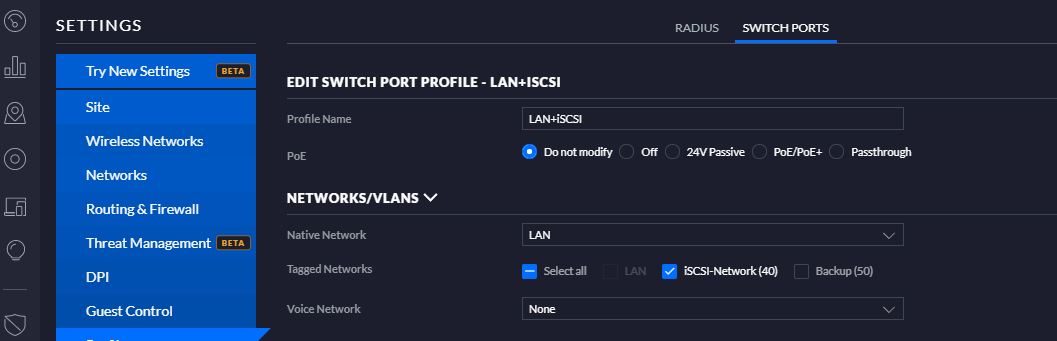

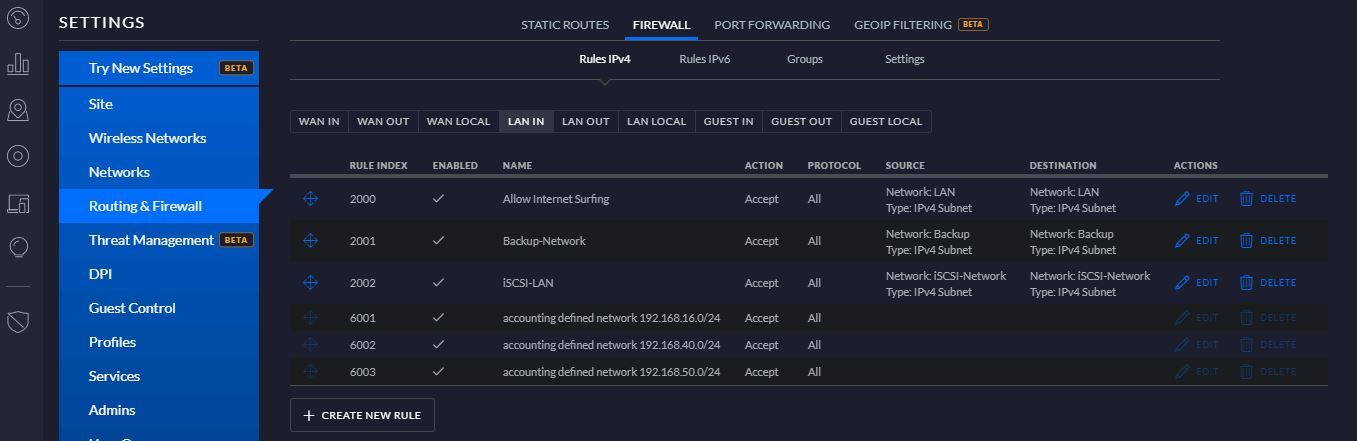

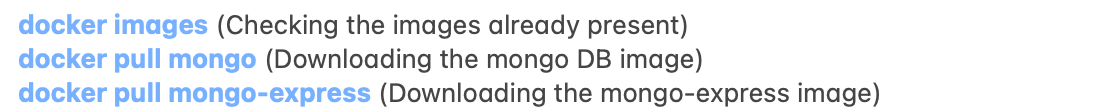

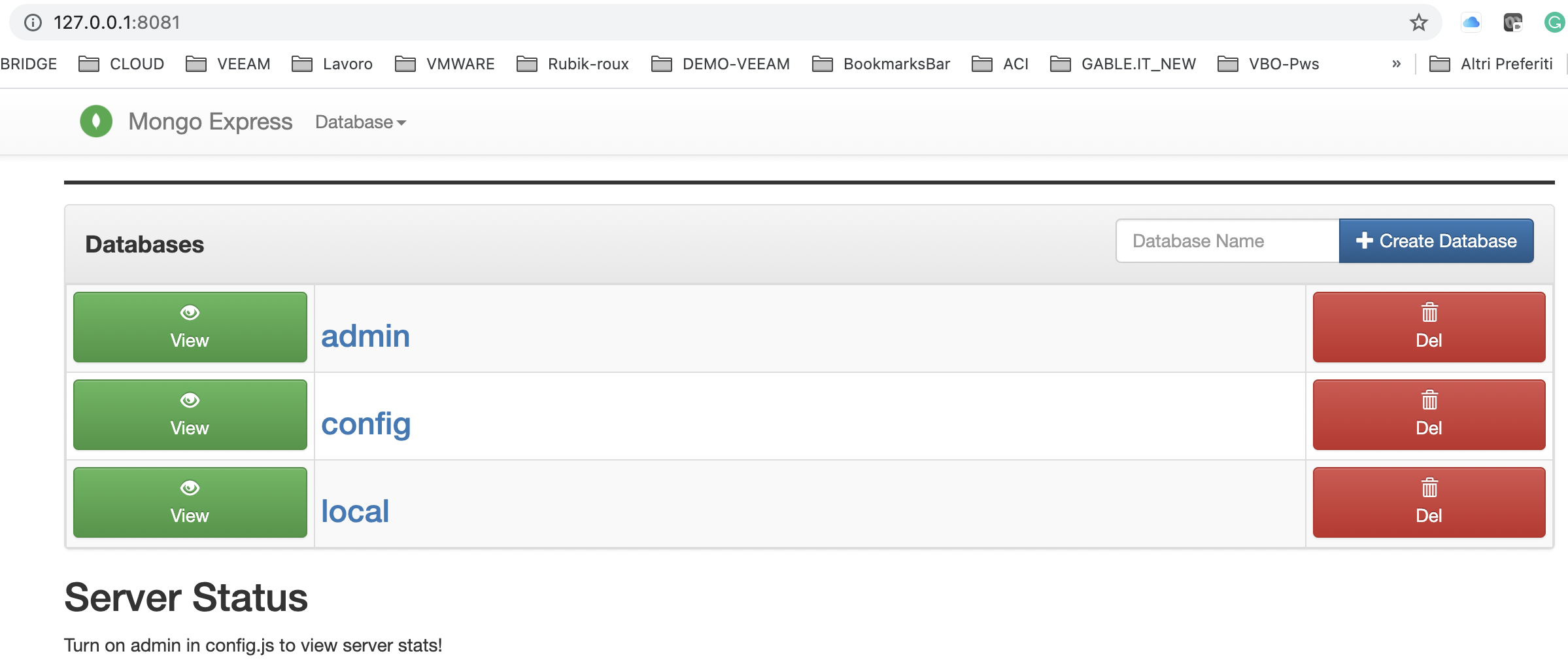

Questo nuovo articolo vuole indicare le nuove opportunità di lavoro create da un ambiente cloud-native.

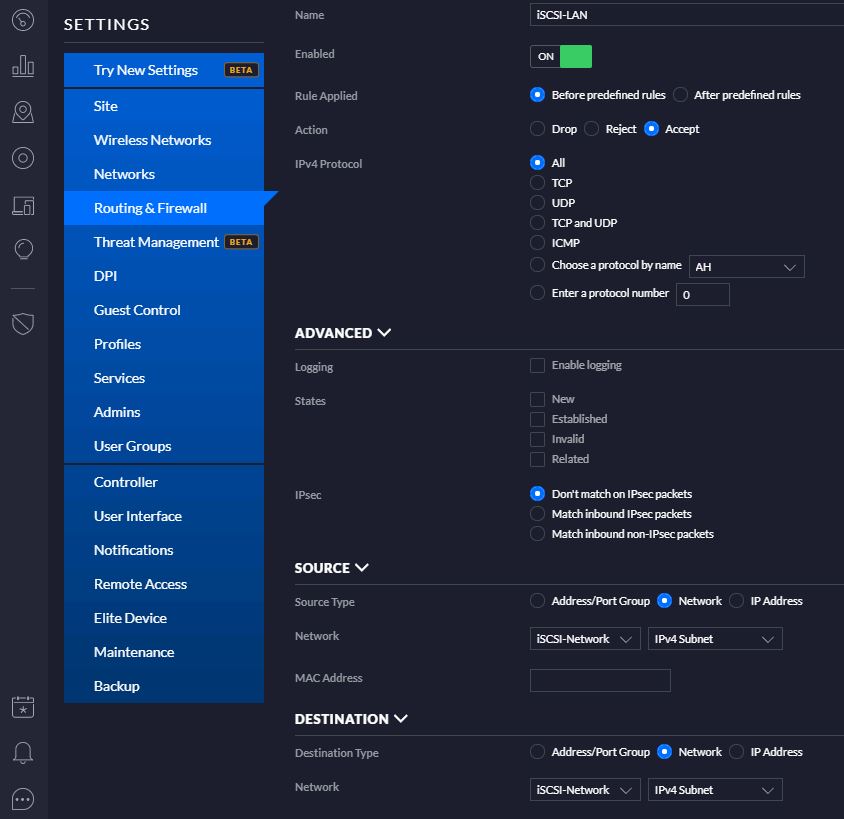

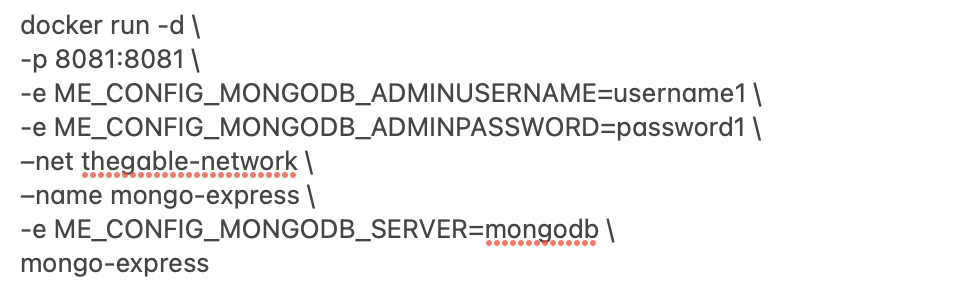

L’immagine n°1 mostra i quattro livelli principali necessari all’architettura per funzionare correttamente (parte rettangolare sinistra).

Sul lato destro (cerchi) sono rappresentati le occupazioni dell’operatore rispetto ad ogni singolo livello.

Immagine 1

Immagine 1

Dal basso verso l’alto:

1- Gli Storage and Network Operator (SNO) hanno la responsabilità di gestire l’architettura hardware.

Il numero dell’attività del ruolo può diminuire in caso di implementazione in un cloud pubblico o IaaS (Infrastructure as a Service)

2- L’Operatore del sistema operativo (OSO) lavora al livello del sistema operativo in cui viene eseguito il servizio k8s.

L’OSO ha bisogno di competenza in Linux e Windows. Sono spesso richieste competenze nell’architettura di virtualizzazione come VMware, RedHat, Nutanix, ecc.

Se l’architettura è stata affittata dal cloud pubblico o in generale in un IaaS, le competenze devono coprire questa nuova architettura.

3- L’operatore di orchestrazione (OO) lavora con il nucleo dell’ambiente di amministrazione cloud-native. Questo mondo ha bisogno di molte nuove abilità.

L’automazione è il figlio dell’orchestrazione.

Il concetto principale è che l’OO dovrebbe avere competenze sufficienti per riuscire a seguire tutti i processi di “Integrazione Continua” e “Consegna Continua” (spesso chiamata CI/CD).

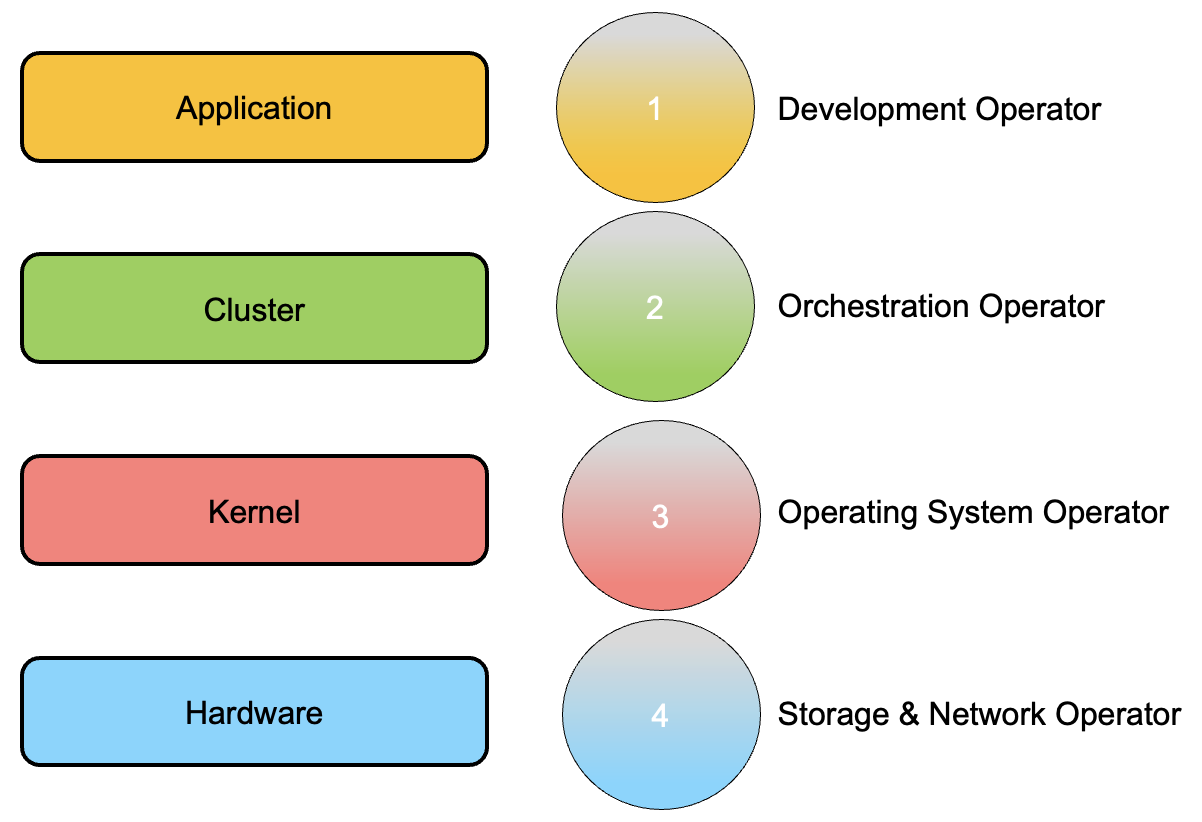

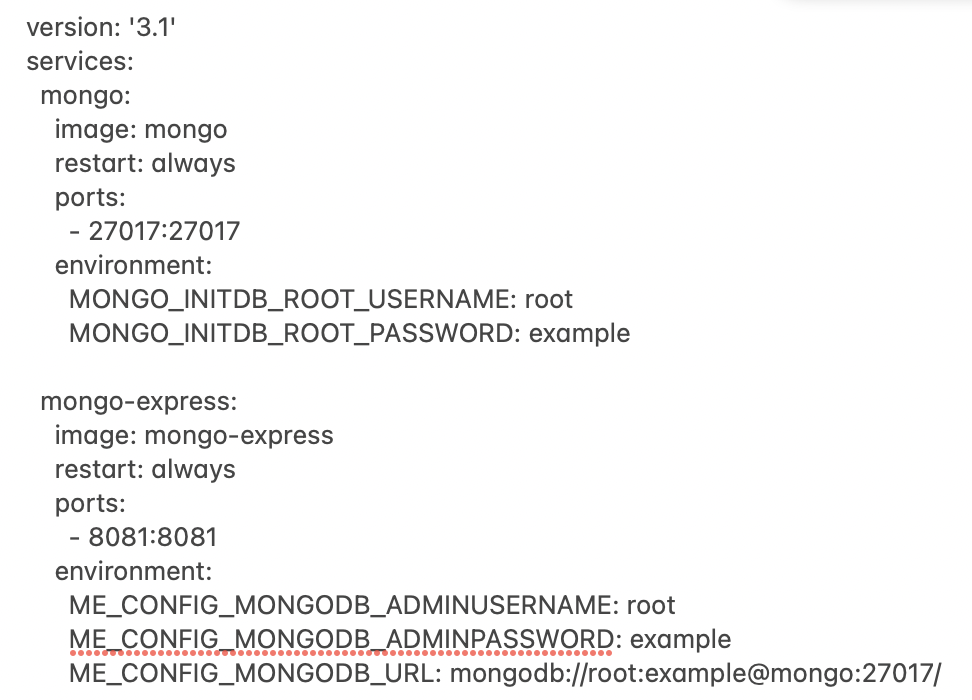

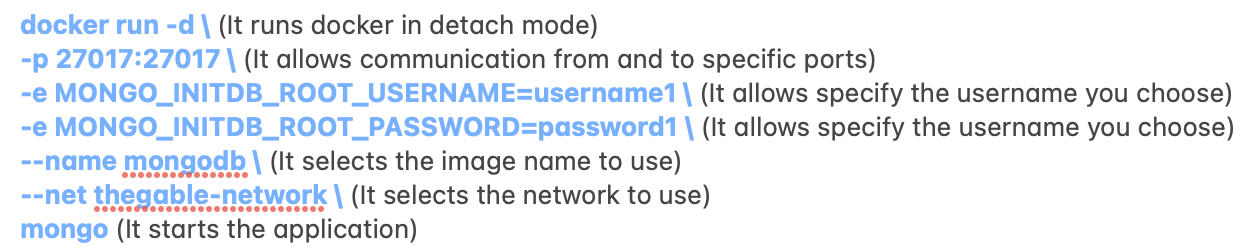

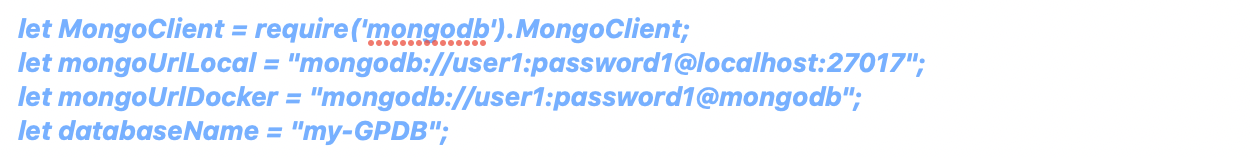

L’immagine 2 dà un’idea a riguardo

Le frecce centrali mostrano il flusso per consentire l’erogazione di un servizio.

Per ogni singola freccia ci sono nuovi strumenti da conoscere per gestire l’intero rilascio del servizio.

Solo alcuni esempi: per testare l’ambiente è possibile lavorare con cetriolo o Cypress.io, per la distribuzione e la costruzione è possibile utilizzare Jenkins… e così via…

Immagine 2

Immagine 2

Nota 1: ci sono così tante piattaforme disponibili che la scelta di quella giusta potrebbe essere molto impegnativa

4- L’operatore di sviluppo è il ruolo delle persone che sono scritte righe di codice. Spesso usano software per gestire attività come Jira Core e Trello.

Nota 2: A mio parere personale, il fornitore che crea un livello software in grado di gestire centralmente tutte queste 6 attività principali avrà un vantaggio competitivo rispetto ai concorrenti.

I grandi fornitori stanno già giocando: RedHat sta lavorando dall’inizio con la sua piattaforma (OpenStack), VMware ha rilasciato Tanzu, Nutanix con Carbonite e Microsoft giocherà il suo ruolo con la nuova versione di Windows 2022.

L’unico buon suggerimento che posso darti è quello di studiare questo nuovo e fantastico mondo.

A presto e abbi cura di te

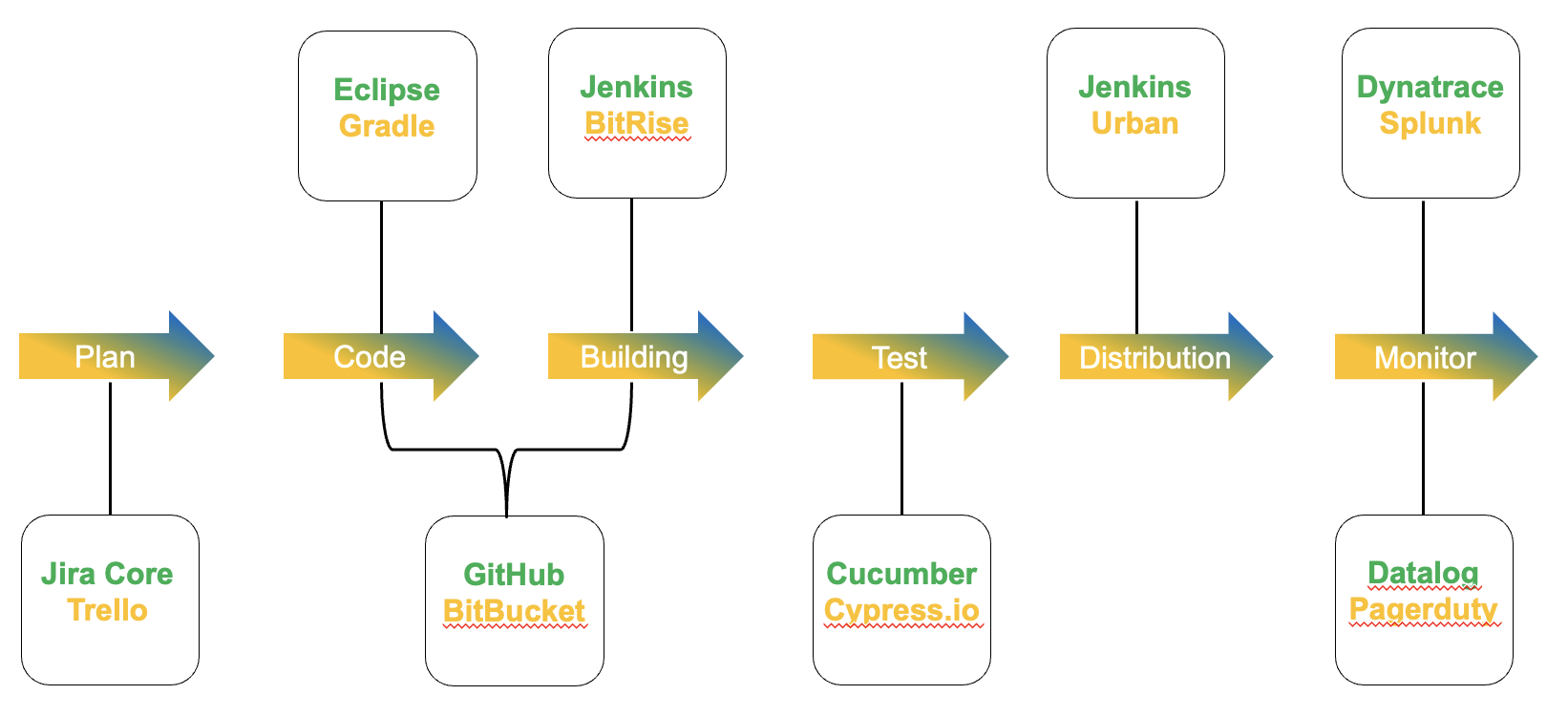

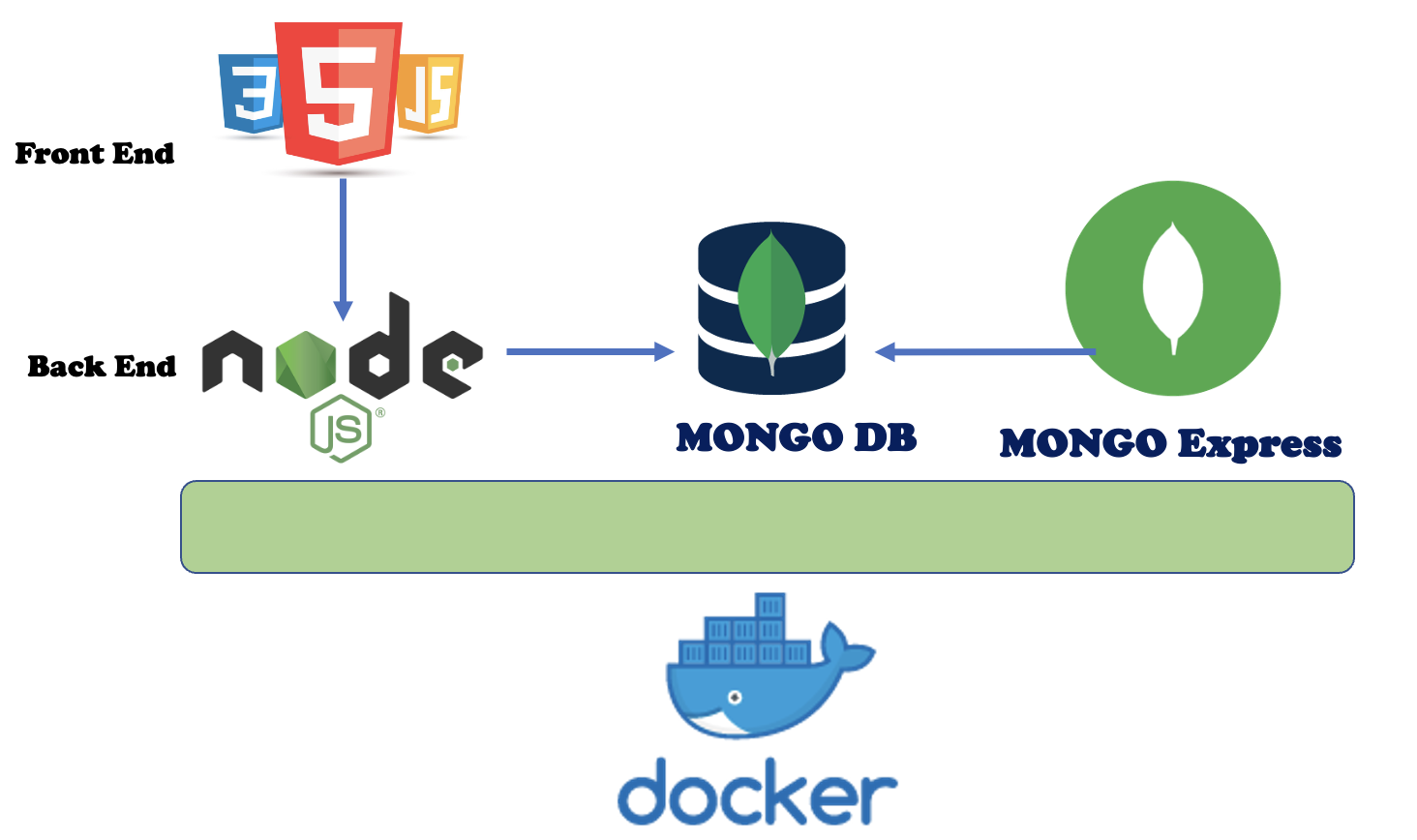

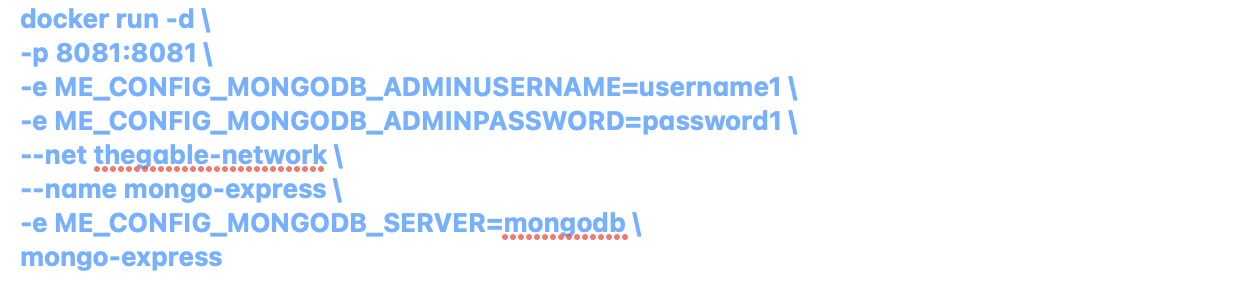

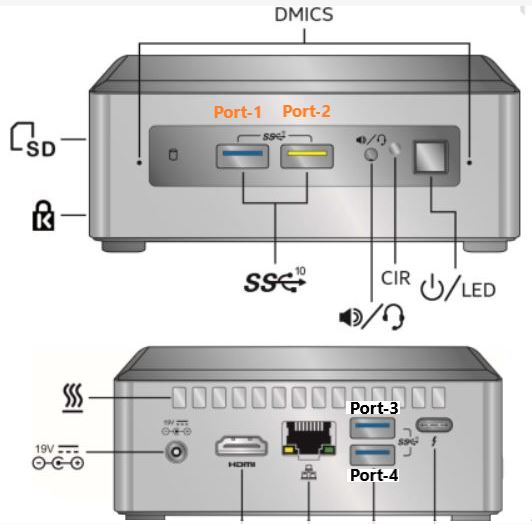

mypersonalfile.yaml

mypersonalfile.yaml Picture 1

Picture 1

Picture 1

Picture 1

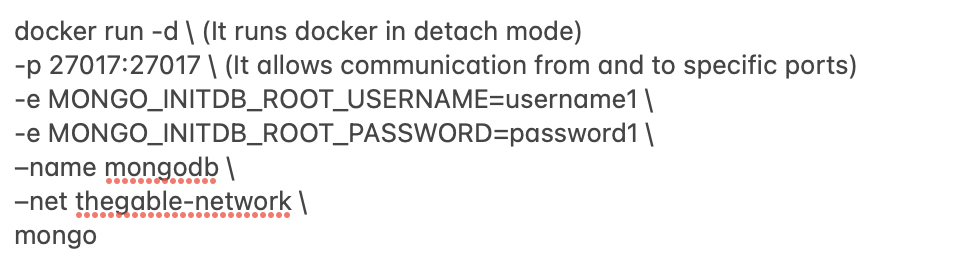

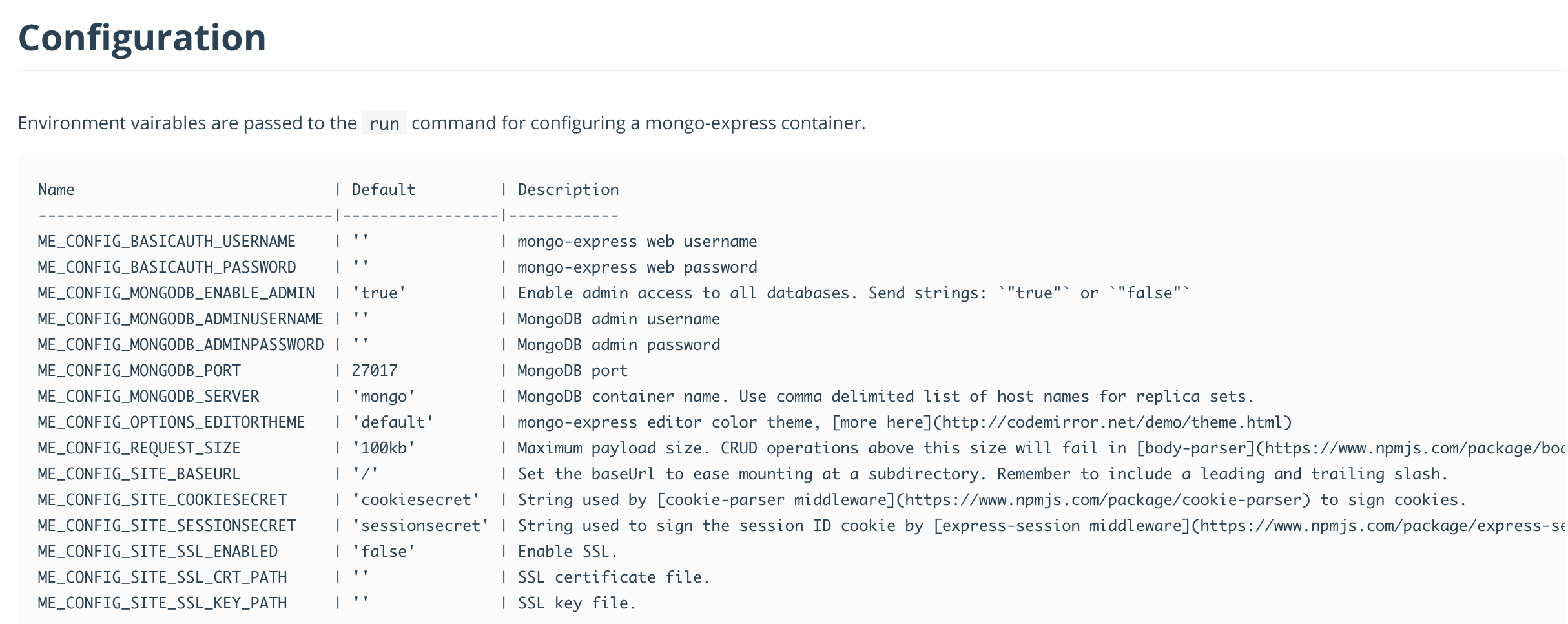

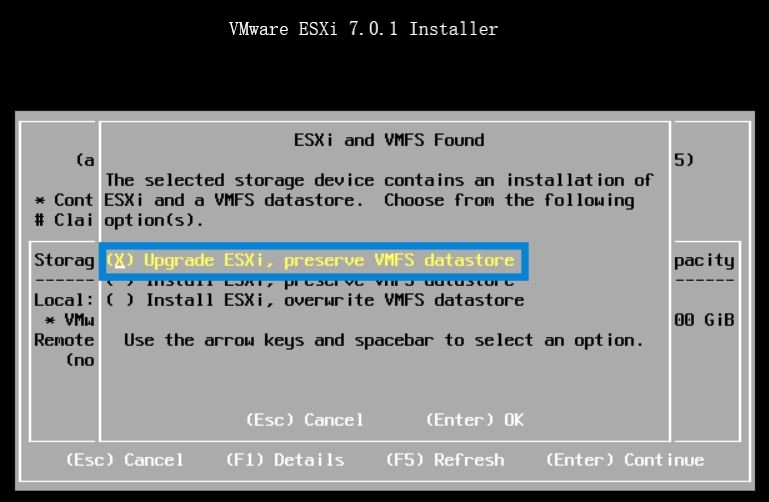

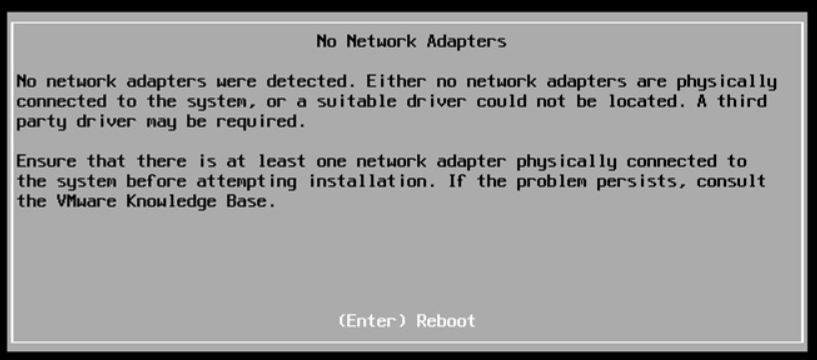

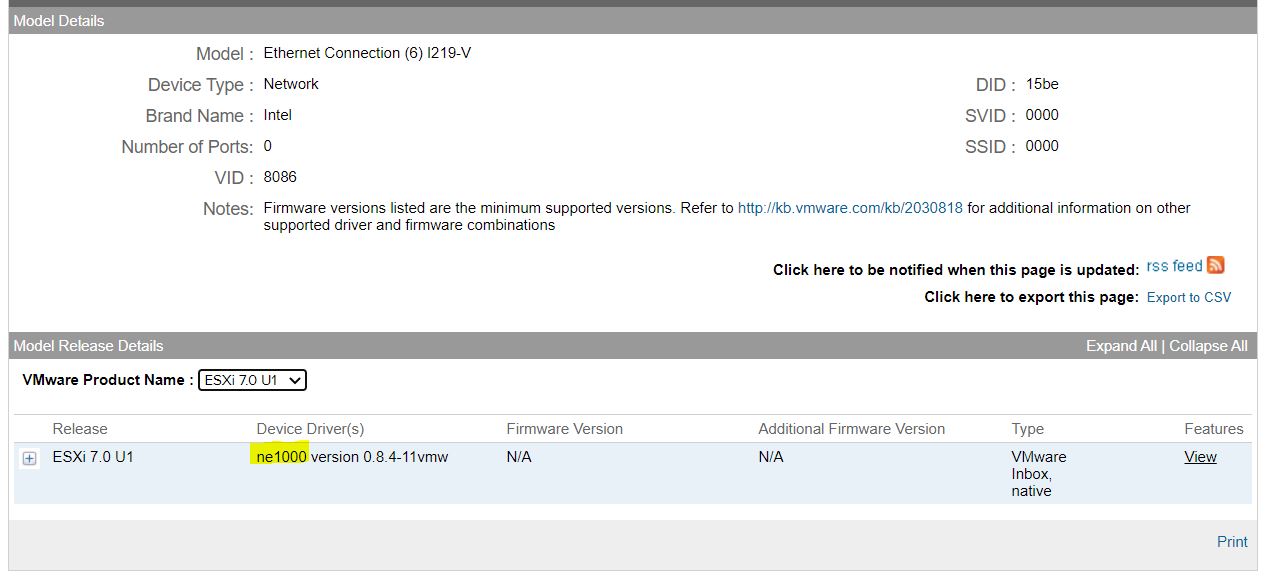

Last weekend I upgraded all my Servers to the last VMware ESXi release (7.0.1 C) and this article is meant to describe all steps I performed.

Last weekend I upgraded all my Servers to the last VMware ESXi release (7.0.1 C) and this article is meant to describe all steps I performed. Picture 1

Picture 1 Picture 2

Picture 2